In PowerShell, loops are essential for iterating through data efficiently. However, improper use of loops, especially foreach, can lead to significant performance issues. In this post, we’ll look at a common pitfall when using foreach and how to correct it for optimal performance.

Incorrect Example

Let’s start with an inefficient use of foreach. In this example, the script takes much longer to execute due to the way arrays are handled in each iteration.

$Time = Measure-Command -Expression {

$Files = Get-ChildItem -Path 'C:\Program Files' -Recurse -ErrorAction SilentlyContinue

# Initialize an empty array

$LastWriteTime = @()

# Iterate through each file and add its LastWriteTime to the array

foreach($File in $Files)

{

$LastWriteTime += $File.LastWriteTime # Adding to the array using += is inefficient

}

}

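$Time.TotalSeconds # Outputs the time taken for execution

Every time += is used, PowerShell allocates a new array and copies every existing element into it, because arrays have a fixed size. Each append therefore gets more expensive as the array grows, which is very inefficient for large datasets.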
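
The copy behind += can be observed directly. The following is a minimal sketch, not part of the original script; the variable names $Numbers and $Before are made up for illustration.

```powershell
# Keep a second reference to the original array object
$Numbers = @(1, 2, 3)
$Before  = $Numbers

# Arrays report a fixed size, so they cannot grow in place
$Numbers.IsFixedSize

# += builds a new, larger array and assigns it to $Numbers
$Numbers += 4

# $Before still points at the old 3-element array
$SameObject = [object]::ReferenceEquals($Before, $Numbers)
"Same object after +=: $SameObject"
"Old array count: $($Before.Count), new array count: $($Numbers.Count)"
```

Because the old array is left untouched and a new one is created, a loop that appends N items ends up copying elements over and over, which is where the time goes.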

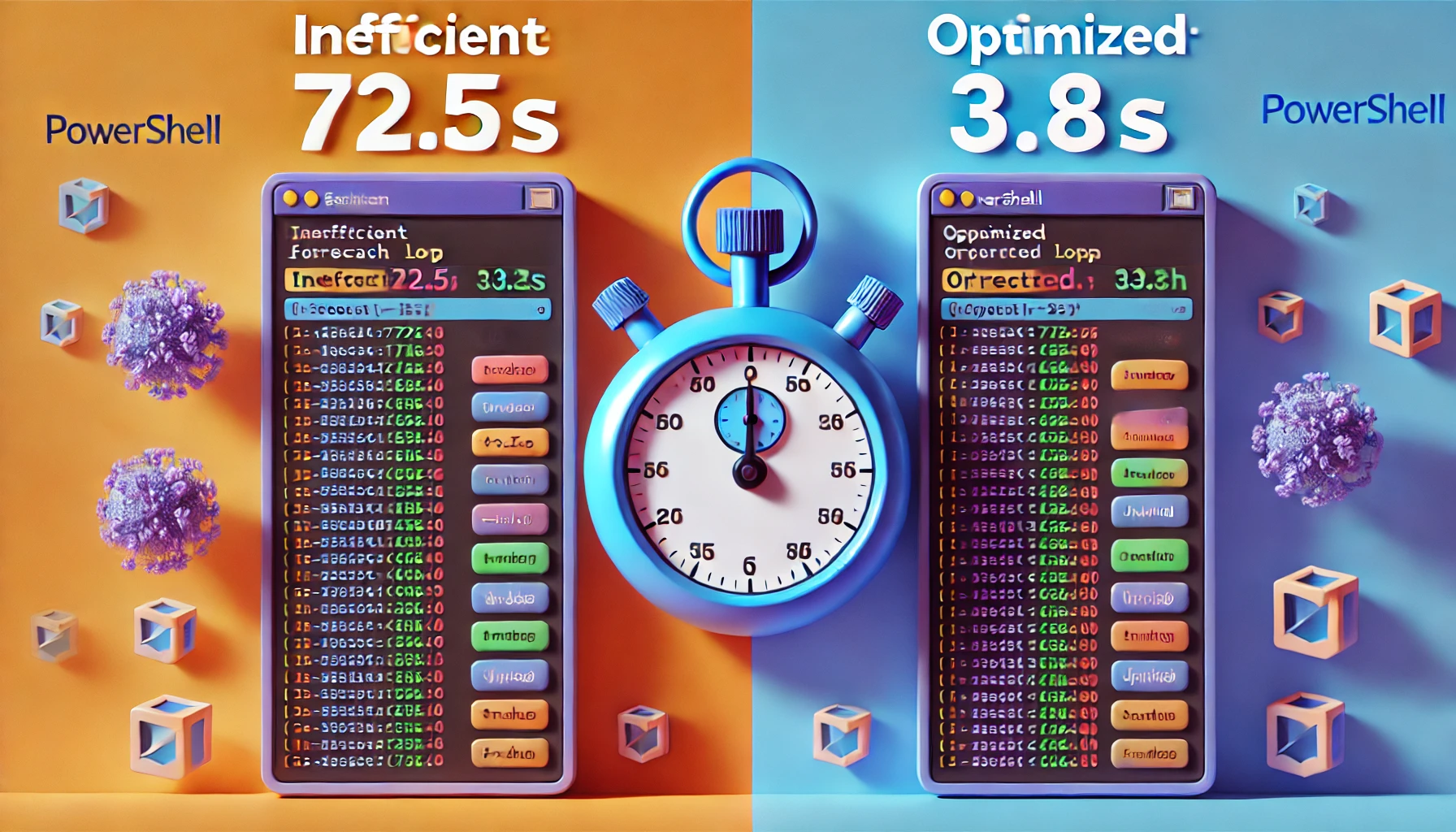

Result: 72.5 seconds for the execution.

Correct Example

Now, let’s see how we can optimize the script by directly filling the array without using +=.

$Time = Measure-Command -Expression {

$Files = Get-ChildItem -Path 'C:\Program Files' -Recurse -ErrorAction SilentlyContinue

# Initialize an empty array (not strictly needed: the assignment below replaces it)

$LastWriteTime = @()

# Use foreach to directly populate the array with values

$LastWriteTime = foreach($File in $Files)

{

$File.LastWriteTime # Directly output the LastWriteTime for each file

}

}

$Time.TotalSeconds # Outputs the time taken for execution

Instead of using +=, we assign the output of the foreach loop to the variable. PowerShell collects every value the loop emits and builds the array once the loop has finished.
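
One detail worth knowing when capturing loop output: if the loop emits only one value, the variable holds that single value rather than an array. A small sketch of the behavior, using made-up variable names and numbers instead of files:

```powershell
# Several values emitted: the result is an array
$Squares = foreach ($n in 1..5) { $n * $n }
"Squares is an array: $($Squares -is [array]), count: $($Squares.Count)"

# A single value emitted: the result is that value itself, not an array
$Single = foreach ($n in 1..1) { $n * $n }
"Single is an array: $($Single -is [array])"

# Wrapping the loop in @() guarantees an array in every case
$Wrapped = @(foreach ($n in 1..1) { $n * $n })
"Wrapped is an array: $($Wrapped -is [array]), count: $($Wrapped.Count)"
```

If later code relies on array behavior such as indexing, the @( ) wrapper is a cheap safeguard for folders that happen to contain zero or one file.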

Result: 3.8 seconds for the execution.
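
Both timings above include the Get-ChildItem call, so disk speed and file system caching can influence the numbers. To compare only the two loop patterns, a sketch like the following may help. It assumes in-memory sample data ($Items, a hypothetical range of 20,000 integers) in place of real files, and the absolute timings will differ from machine to machine.

```powershell
# Hypothetical in-memory data, so disk access does not affect the timing
$Items = 1..20000

# Pattern 1: grow the array with += inside the loop
$AppendTime = Measure-Command -Expression {
    $Appended = @()
    foreach ($Item in $Items) { $Appended += $Item }
}

# Pattern 2: capture the loop output in a single assignment
$CaptureTime = Measure-Command -Expression {
    $Captured = foreach ($Item in $Items) { $Item }
}

# Print both timings side by side
'{0,-10} {1,10:N1} ms' -f '+=', $AppendTime.TotalMilliseconds
'{0,-10} {1,10:N1} ms' -f 'capture', $CaptureTime.TotalMilliseconds
```

Running it a few times and with different sizes for $Items gives a feel for how quickly the += version falls behind as the input grows.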

Conclusion

This example demonstrates how a small adjustment in your PowerShell code can significantly reduce execution time. When dealing with large datasets or complex scripts, avoid inefficient practices like repeatedly copying arrays using +=. By using foreach more efficiently, you can greatly enhance the performance of your PowerShell scripts.

Nice post and very valuable. I made a small adjustment to your code to test something. On my machine this gives a speed gain of 7% compared to your fastest version. In my code I use a generic list and call .Add().

my code:

$Time = Measure-Command -Expression {

$Files = Get-ChildItem -Path 'C:\Program Files' -Recurse -ErrorAction SilentlyContinue

# Initialize an empty List

[System.Collections.Generic.List[string]]$LastWriteTime = @()

# Iterate through each file and add its LastWriteTime to the List

foreach($File in $Files)

{

$LastWriteTime.add($File.LastWriteTime)

}

}

$Time.TotalSeconds # Outputs the time taken for execution

Yep, [System.Collections.Generic.List[string]] with .Add() (avoiding New-Object) is going to be the fastest.
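
One thing to keep in mind with the list version: because the list is typed as List[string], each LastWriteTime is converted to text when it is added. If the values should stay DateTime objects (for sorting or date arithmetic, for example), the list can be typed as List[datetime] instead. A minimal sketch, assuming hypothetical sample dates in $Dates rather than real files:

```powershell
# Hypothetical sample data standing in for the files' LastWriteTime values
$Start = [datetime]::new(2024, 1, 1)
$Dates = foreach ($Day in 0..9) { $Start.AddDays($Day) }

# Same pattern as in the comment: a generic list filled with .Add()
$AsText  = [System.Collections.Generic.List[string]]::new()
$AsDates = [System.Collections.Generic.List[datetime]]::new()

foreach ($Date in $Dates)
{
    $AsText.Add($Date)   # converted to a string on the way in
    $AsDates.Add($Date)  # stored as a DateTime
}

"List[string] element type:   $($AsText[0].GetType().Name)"
"List[datetime] element type: $($AsDates[0].GetType().Name)"
```

Which element type is right depends on what happens to the values afterwards; the point is only that the type in the brackets decides what ends up in the list.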